Yongyuan Cheryl Liang

My research focuses on developing foundation models and intelligent agents.

I actively explore both theoretical frameworks and empirical findings, with specific research interests in:

Large Multimodal Models: Large multimodal models for virtual and embodied agentic tasks.

Cross-modal Reasoning: Cross-modality alignment and reasoning mechanisms for true omnimodal generation.

Over the past few years, I have worked on Reinforcement Learning, Representations, and Robustness.

I joined UMD CS as a PhD student, advised by Furong Huang.

I was fortunate to work with Jianwei Yang and Huazhe Xu.

I conducted research at NVIDIA, Adobe, and Microsoft Research. I received my B.S. degree in Mathematics from Sun Yat-sen University.

If you’re interested in my research, potential collaborations, or simply want to catch up, feel free to drop me an email.

News

Feb' 26

Three papers to appear in CVPR 2026 (2 main track and 1 findings).

Feb' 26

We drop SAW-Bench and release a new blog post about Spatial Modeling.

Jan' 26

MomaGraph selected as Oral presentation (1%) in ICLR 2026.

Jan' 26

One paper to appear in ICRA 2026.

Jan' 26

Two papers to appear in ICLR 2026 (ROVER and MomaGraph).

Sept' 25

One paper to appear in NeurIPS 2025.

Feb' 25

Magma to appear in CVPR 2025.

Jan' 25

Two papers to appear in ICLR 2025.

Jan' 25

Started updating Awesome-Generalist-Agents.

Sept' 24

Make-An-Agent to appear in NeurIPS 2024.

June' 24

ACE selected as Oral presentation (1%) in ICML 2024.

May' 24

Two papers to appear in ICML 2024.

Jan' 24

Three papers to appear in ICLR 2024, including two spotlights and one poster.

Selected Publications and Preprints

* denotes Equal Contributions and Project Lead; † indicates Equal Advising.

Embodied Large Multimodal Model

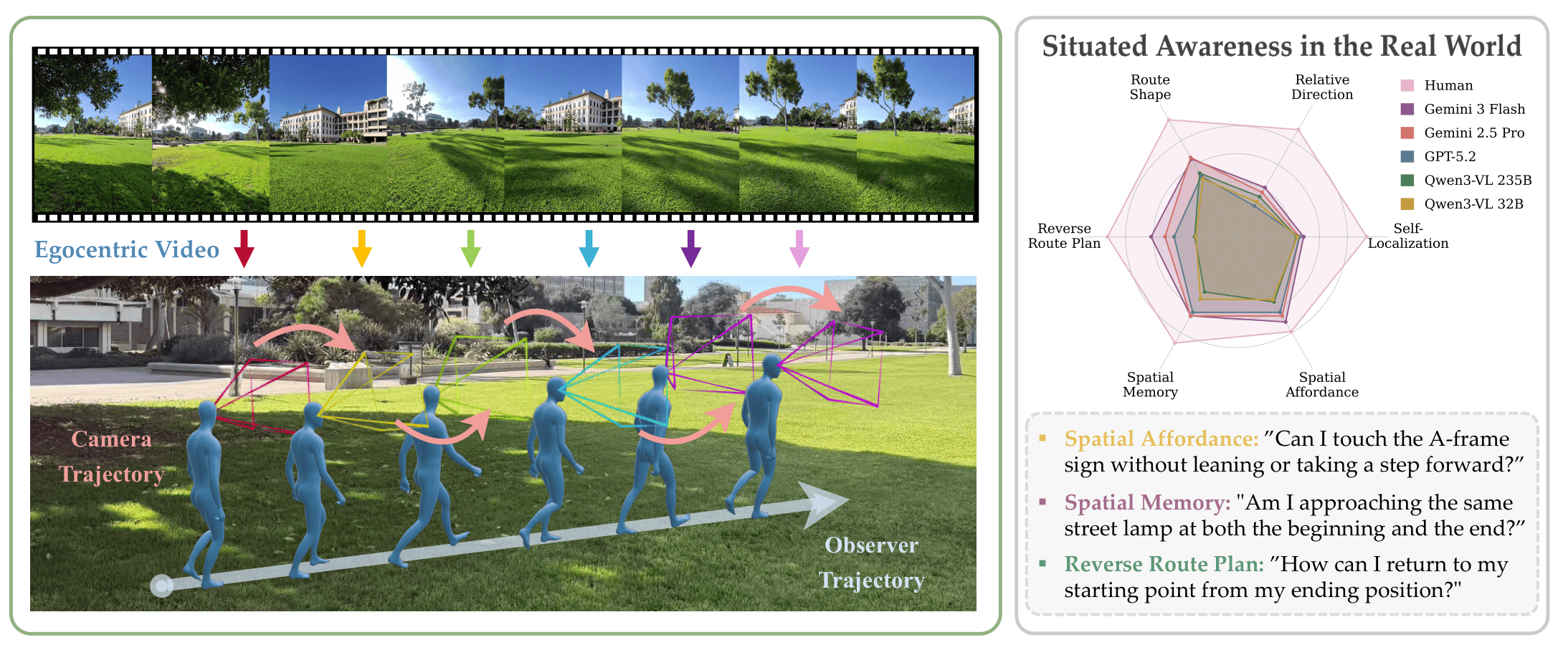

Learning Situated Awareness in the Real World

Yongyuan Liang, Rajiv Dhawan, Jiajun Wu, Ming-Hsuan Yang, Xin Eric Wang

arXiv, 2026

Project Page / Paper / Benchmark / Twitter

Embodied Large Multimodal Model

MomaGraph: State-Aware Unified Scene Graphs with Vision-Language Models for Embodied Task Planning

Yongyuan Liang*, Yen-Jen Wang*, Gireesh Nandiraju, Yuanliang Ju, Seungjae Lee, Qiao Gu, Elvis Hsieh, Furong Huang†, Koushil Sreenath†

ICLR Oral

Project Page / Paper / Code / Benchmark / Twitter

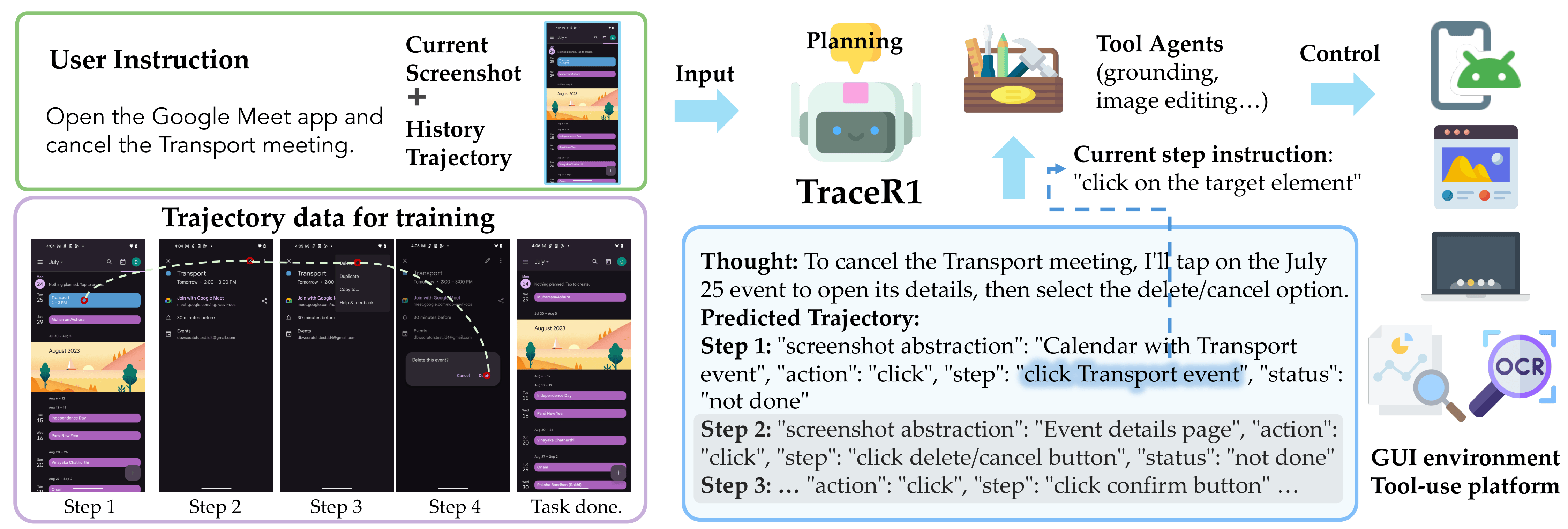

Agentic Large Multimodal Model

Anticipatory Planning for Multimodal Agents

Yongyuan Liang, Shijie Zhou, Yu Gu, Hao Tan, Gang Wu, Franck Dernoncourt, Jihyung Kil, Ryan A. Rossi, Ruiyi Zhang

CVPR

Paper / Twitter

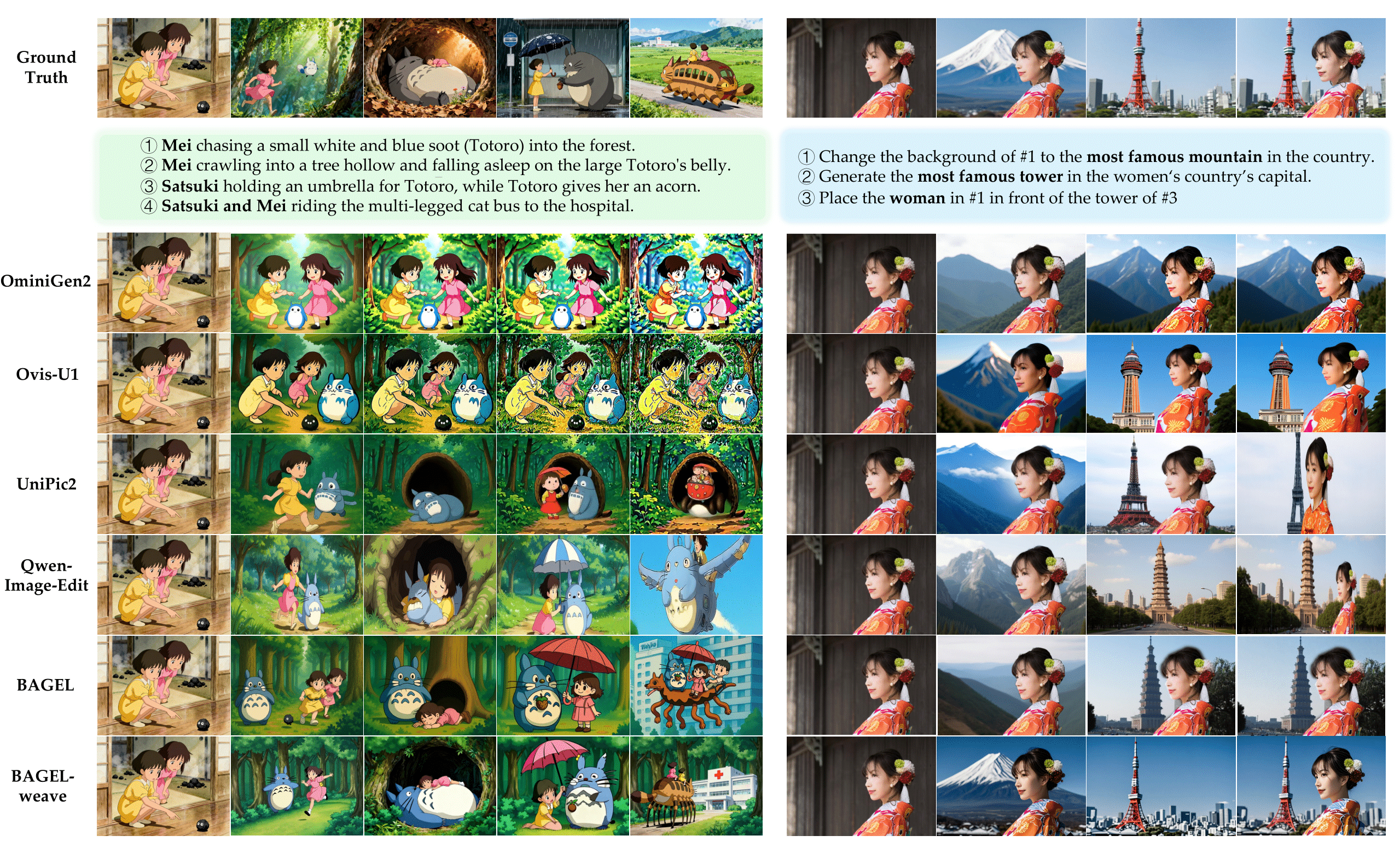

Unified Multimodal Model

WEAVE: Unleashing and Benchmarking the In-context Interleaved Comprehension and Generation

Yongyuan Liang, ..., Weijia Wu, Hanwang Zhang, Tat-Seng Chua, et al.

CVPR

Project Page / Paper / Code / Benchmark / Twitter

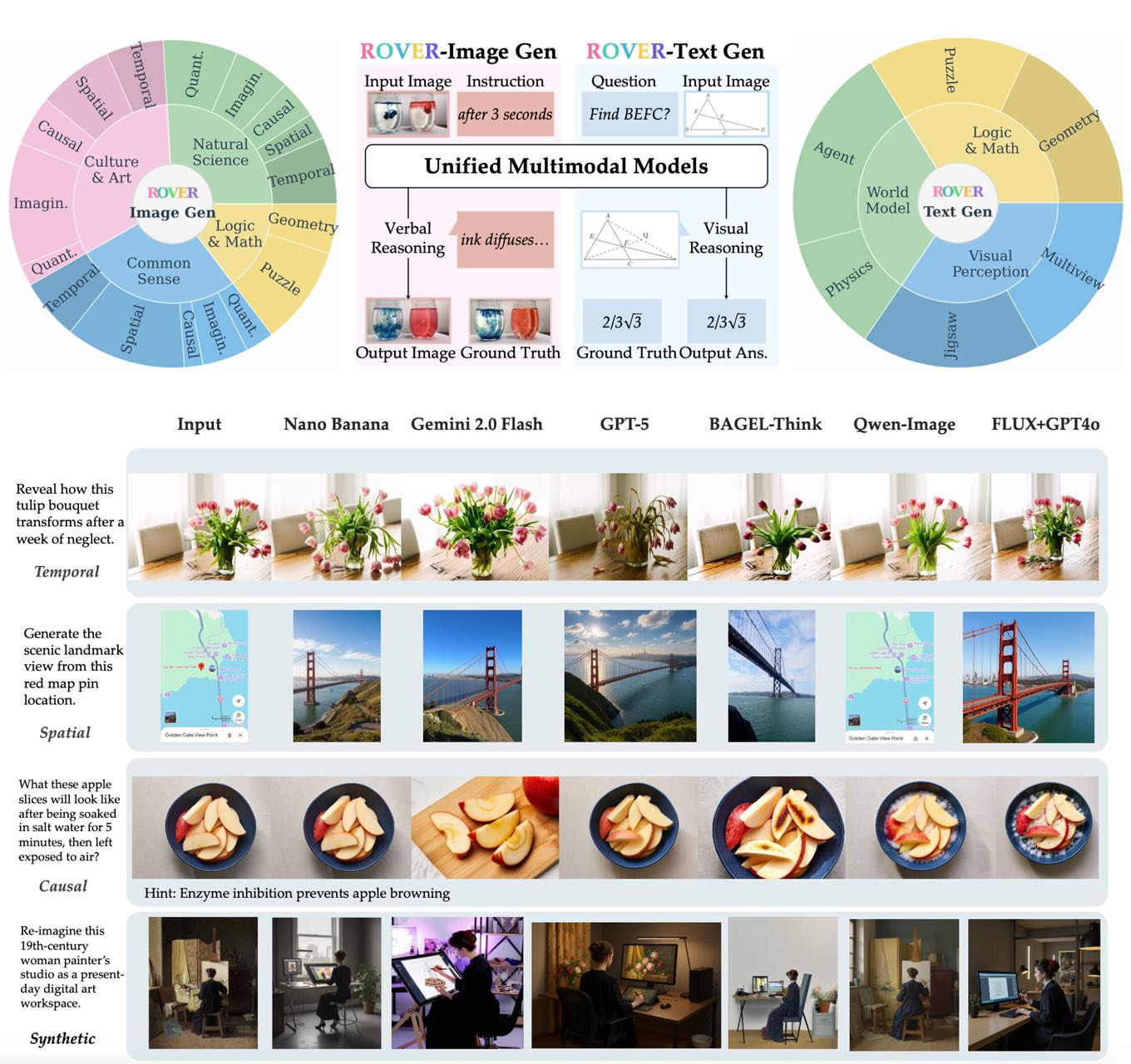

Unified Multimodal Model

ROVER: Benchmarking Reciprocal Cross-Modal Reasoning for Omnimodal Generation

Yongyuan Liang*, Wei Chow*, Feng Li, Ziqiao Ma, Xiyao Wang, Jiageng Mao, Jiuhai Chen, Jiatao Gu, Yue Wang†, Furong Huang†

ICLR

Project Page / Paper / Code / Benchmark / Twitter

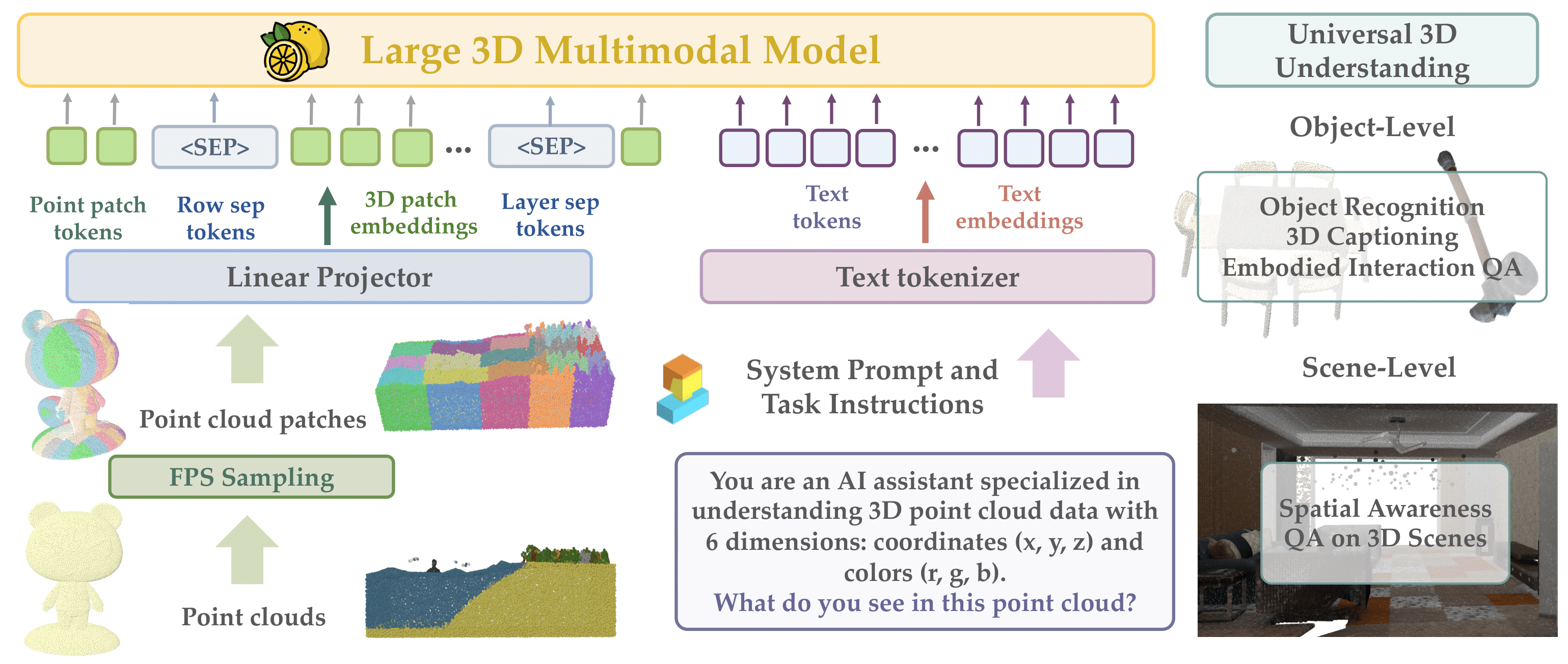

3D Large Multimodal Model

Lemon: A Unified and Scalable 3D Multimodal Model for Universal Spatial Understanding

Yongyuan Liang, Xiyao Wang, Yuanchen Ju, Jianwei Yang, Furong Huang

arXiv, 2025

Spotlight Talks CVPR Workshop CVinW, 2025

Project Page / Paper / Code / Models & Datasets / Twitter

Agentic Large Multimodal Model

Magma: A Foundation Model for Multimodal AI Agents

CVPR

Project Page / Paper / Code / Models & Datasets / Twitter

Embodied Large Multimodal Model

TraceVLA: Visual Trace Prompting Enhances Spatial-Temporal Awareness for Generalist Robotic Policies

Yongyuan Liang*, Shuaiyi Huang, Jianfeng Gao, Hal Daumé III, Andrey Kolobov, Furong Huang, Jianwei Yang

ICLR

Oral Talks ICLR Workshop GenBot, 2025

Project Page / Paper / Code / Models / Twitter

Robots Pre-Train Robots: Manipulation-Centric Robotic Representation from Large-Scale Robot Datasets

Yongyuan Liang*†, Huazhe Xu†

ICLR

Project Page / Paper / Code / Models / Twitter

Embodied Large Multimodal Model

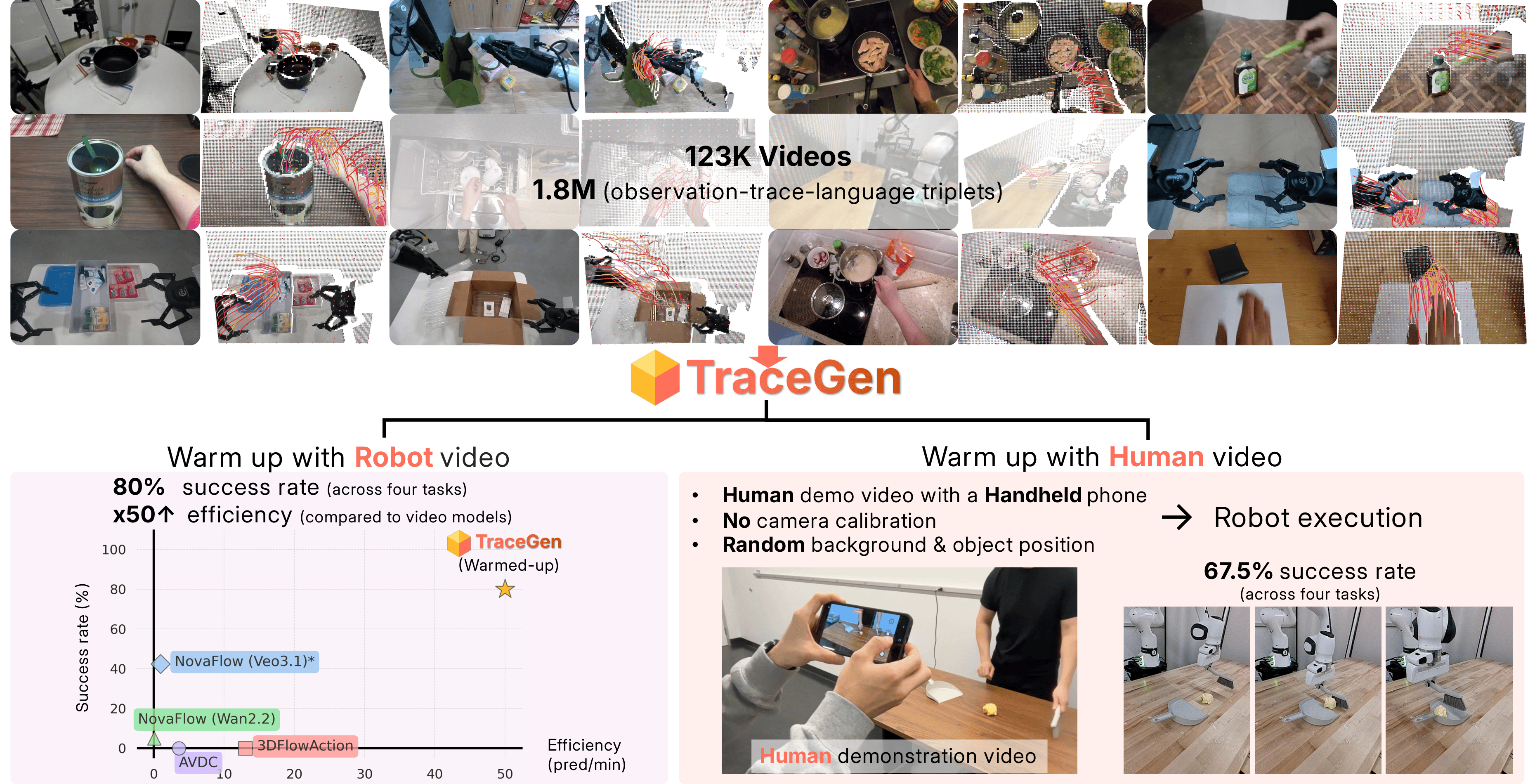

TraceGen: World Modeling in 3D Trace Space Enables Learning from Cross-Embodiment Videos

Yongyuan Liang, Jia-Bin Huang, Furong Huang

CVPR

Project Page / Paper / Code / Twitter

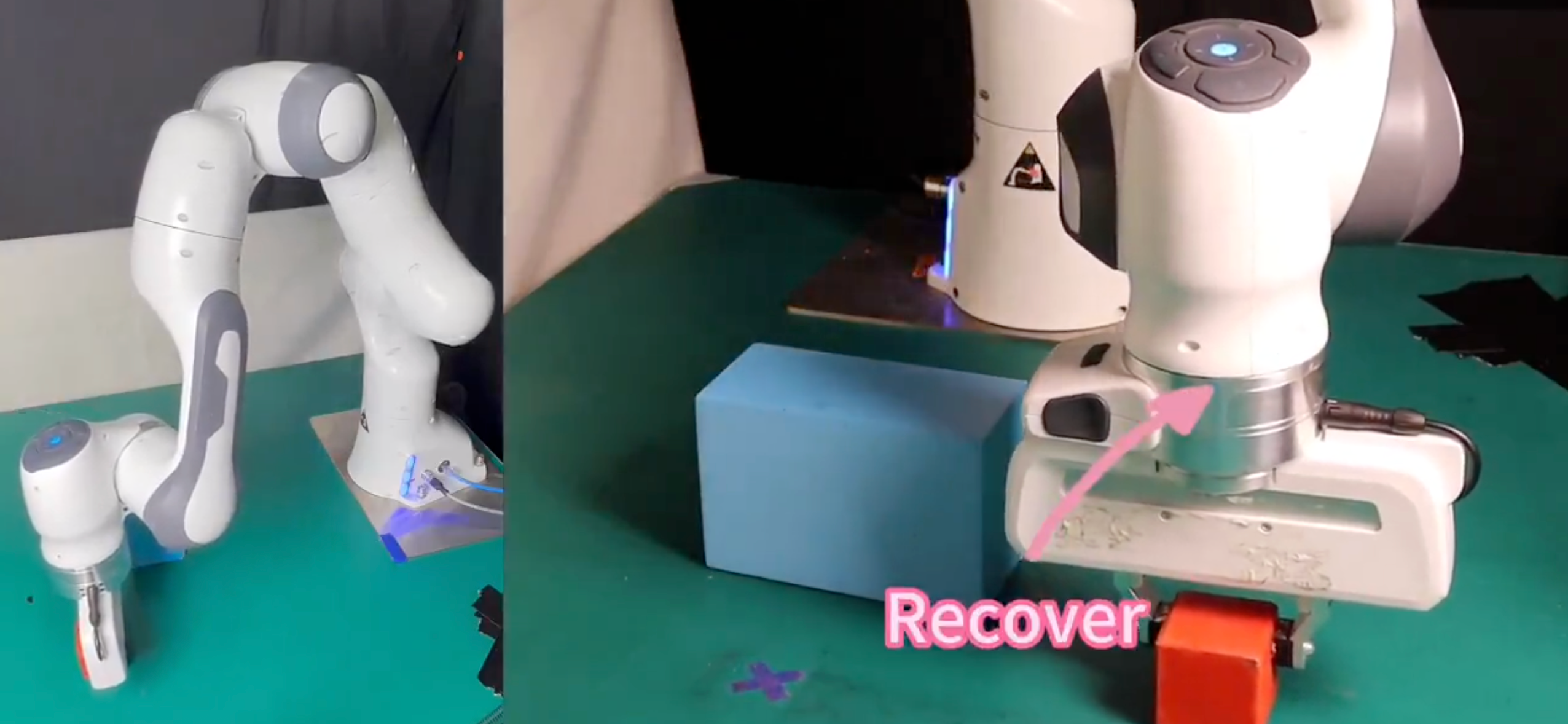

Reinforcement Learning

Failure-Aware RL: Reliable Offline-to-Online Reinforcement Learning

Yongyuan Liang, Bo An, Xiaoli Li, Huazhe Xu

ICRA

Project Page / Paper / Code / Twitter

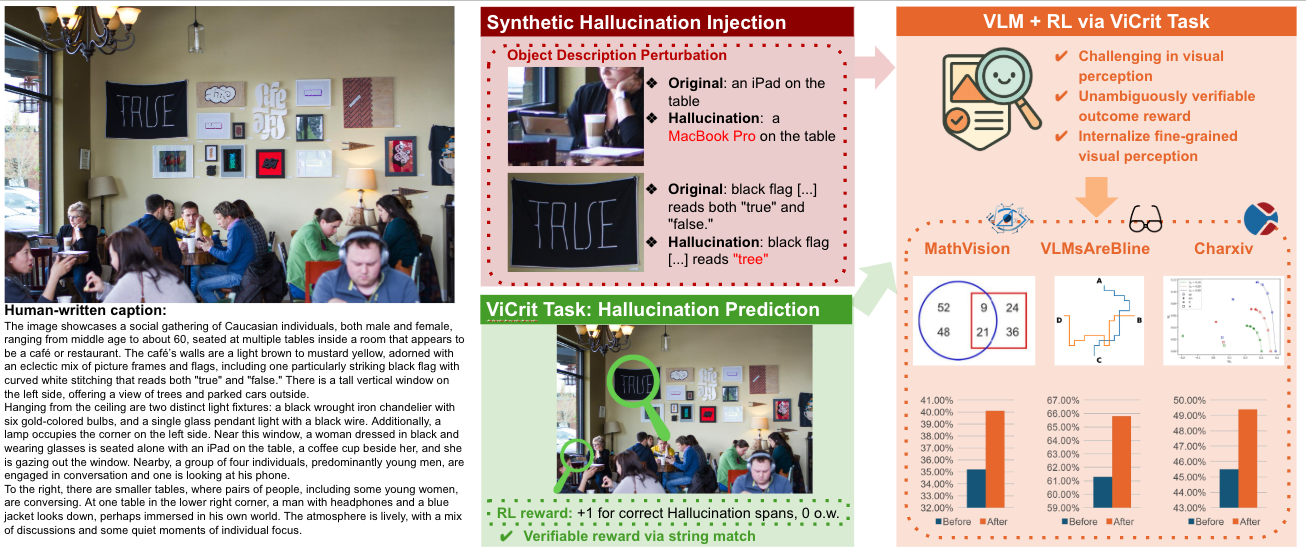

ViCrit: A Verifiable Reinforcement Learning Proxy Task for Visual Perception in VLMs

Yongyuan Liang, Yuhang Zhou, Xiaoyu Liu, Ziyi Zang, Ming Li, Chung-Ching Lin, Kevin Lin, Linjie Li†, Furong Huang†, Lijuan Wang†

NeurIPS

Project Page / Paper / Code / Models & Datasets / Twitter

Generative Model

Make-An-Agent: A Generalizable Policy Network Generator with Behavior-Prompted Diffusion

Yongyuan Liang, Tingqiang Xu, Kaizhe Hu, Guangqi Jiang, Furong Huang, Huazhe Xu

NeurIPS

Oral Talks NeurIPS Workshop AFM, 2024

Project Page / Paper / Code / Models & Dataset / Twitter

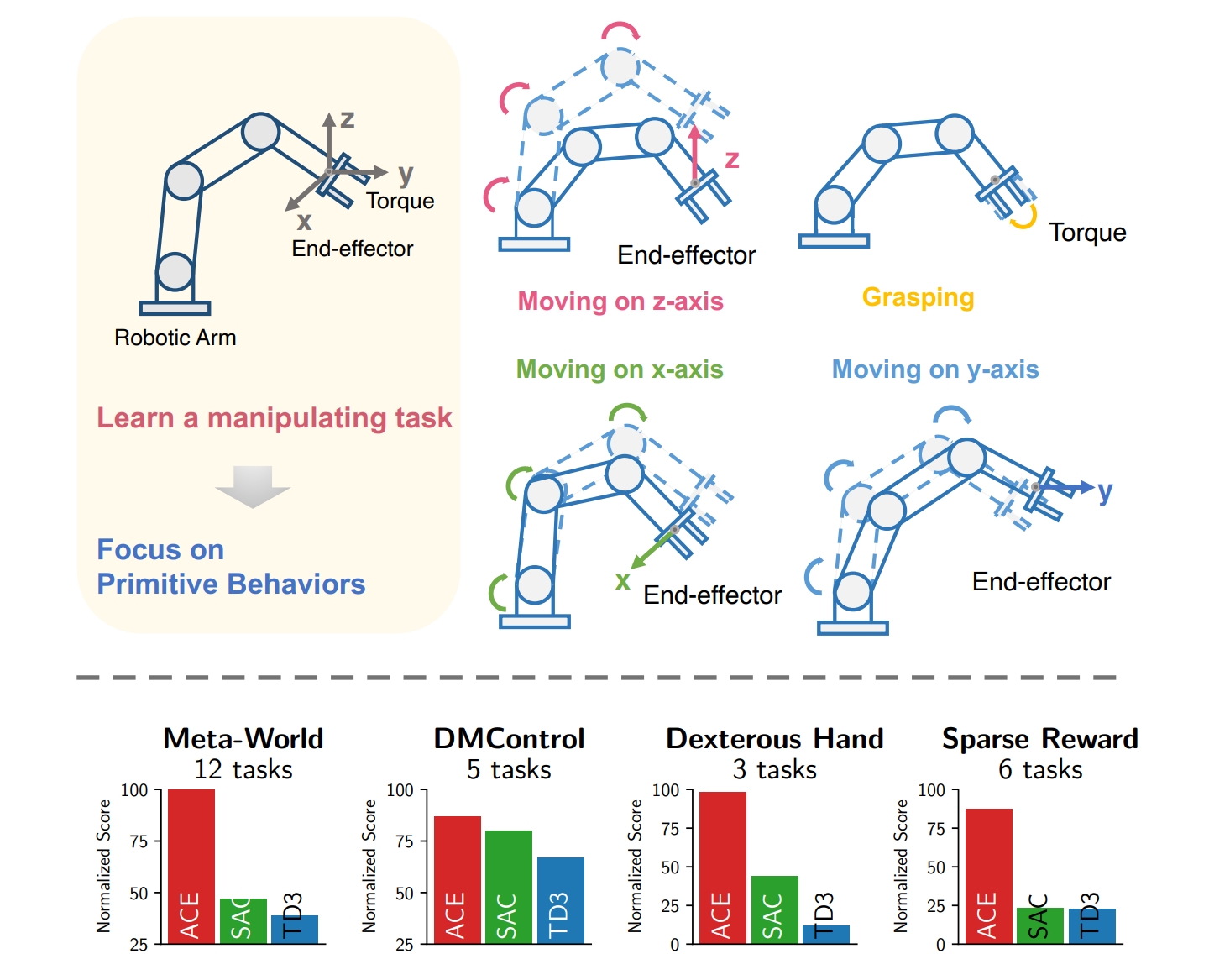

Reinforcement Learning

ACE: Off-Policy Actor-Critic with Causality-Aware Entropy Regularization

Yongyuan Liang*, Yan Zeng, Yu Luo, Guowei Xu, Jiawei Guo, Ruijie Zheng, Furong Huang, Fuchun Sun, Huazhe Xu

ICML Oral

Project Page / Paper / Code / Twitter

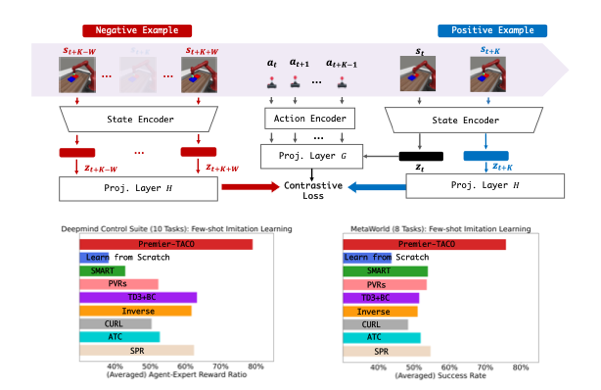

PREMIER-TACO is a Few-Shot Policy Learner: Pretraining Multitask Representation via Temporal Action-Driven Contrastive Loss

Yongyuan Liang, Xiyao Wang, Shuang Ma, Hal Daumé III, Huazhe Xu, John Langford, Praveen Palanisamy, Kalyan Basu, Furong Huang

ICML

Project Page / Paper / Code / Twitter

DrM: Mastering Visual Reinforcement Learning through Dormant Ratio Minimization

Yongyuan Liang*, Xiyao Wang, Zhecheng Yuan, Tianying Ji, Yu Luo, Xiaoyu Liu, Jiaxin Yuan, Pu Hua, Shuzhen Li, Yanjie Ze, Hal Daumé III, Furong Huang, Huazhe Xu

ICLR Spotlight, Top 5%

Project Page / Paper / Code / Twitter

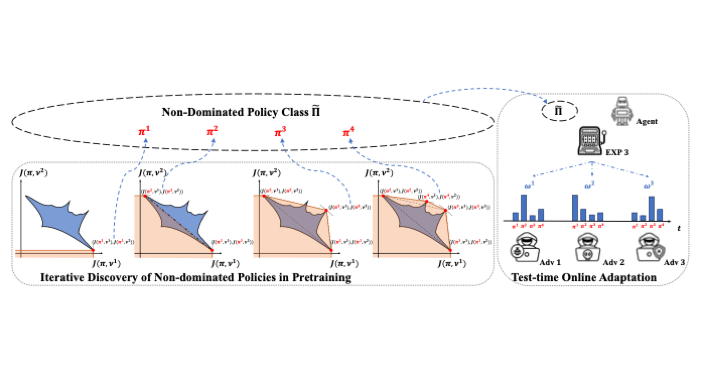

Beyond Worst-case Attacks: Robust RL with Adaptive Defense via Non-dominated Policies

Yongyuan Liang, Furong Huang

ICLR Spotlight, Top 5%

Project Page / Paper / Code / Twitter

Game-Theoretic Robust Reinforcement Learning Handles Temporally-Coupled Perturbations

Yongyuan Liang, Yanchao Sun, Ruijie Zheng, Xiangyu Liu, Benjamin Eysenbach, Tuomas Sandholm, Furong Huang, Stephen Marcus McAleer

ICLR

Paper / Twitter

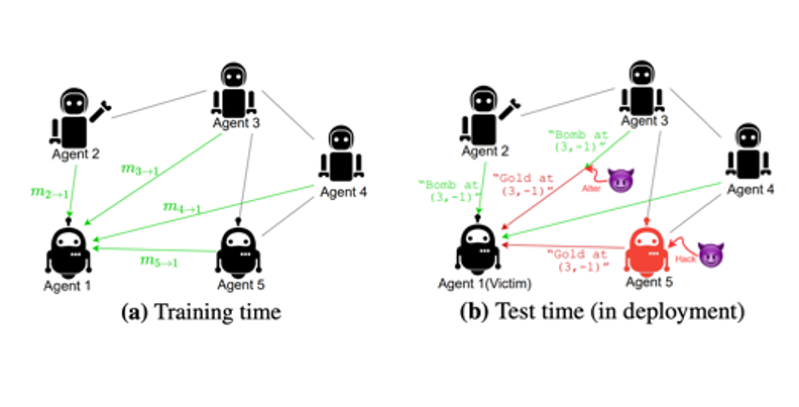

Certifiably Robust Policy Learning against Adversarial Communication in Multi-agent Systems

Yongyuan Liang, Soheil Feizi, Sumitra Ganesh, Furong Huang

ICLR

Paper / Code

Efficient Adversarial Training without Attacking: Worst-Case-Aware Robust Reinforcement Learning

Yongyuan Liang*, Yanchao Sun*, Ruijie Zheng, Furong Huang

NeurIPS

Spotlight Talks NeurIPS Workshop SafeRL, 2021

Paper / Code / Slides

Who Is the Strongest Enemy? Towards Optimal and Efficient Evasion Attacks in Deep RL

Yongyuan Liang, Furong Huang

ICLR

Best Paper Award NeurIPS Workshop SafeRL, 2021

Project Page / Paper / Code

Blogs

Professional Service

Misc

If my name is a bit tricky to pronounce for you, I’d love to go by Cheryl [ˈʃerəl].

Classic INTJ-A.

I've been playing the violin🎻 for over 15 years and served as Principal First Violin in the university orchestra. I also play the piano.

I enjoy reading Japanese and Western literature. Here are some of my reading notes.

Been a fan of Novak Djokovic since 2012.

My Erdős number = 4.

Marginalia 🍊

© Yongyuan Liang. All rights reserved for content and custom design.

Jon Barron.